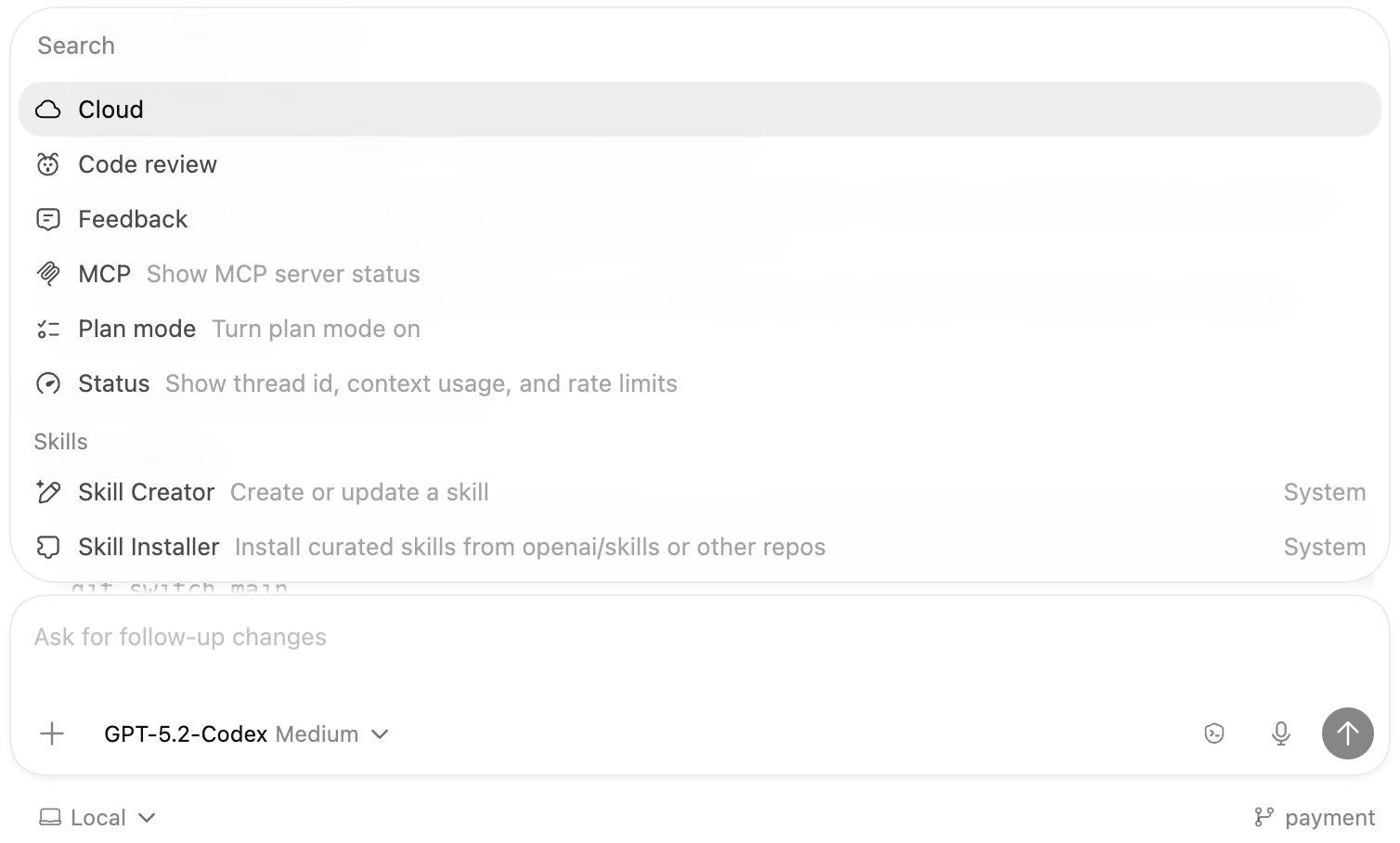

Google recently released an update for the Antigravity IDE that adds a new Terminal Sandboxing feature. It is available only to macOS users for now, and they plan to release it for Linux soon. In simple terms, they define it as:

> Sandboxing provides kernel-level isolation for terminal commands executed by the Agent. When enabled, commands run in a restricted environment with limited file system and network access, protecting your system from unintended modifications.

I think it's great, and it was very much needed. I can now rest assured that nothing outside the current folder is at risk, and I can even vibe code safely while giving the IDE full access.

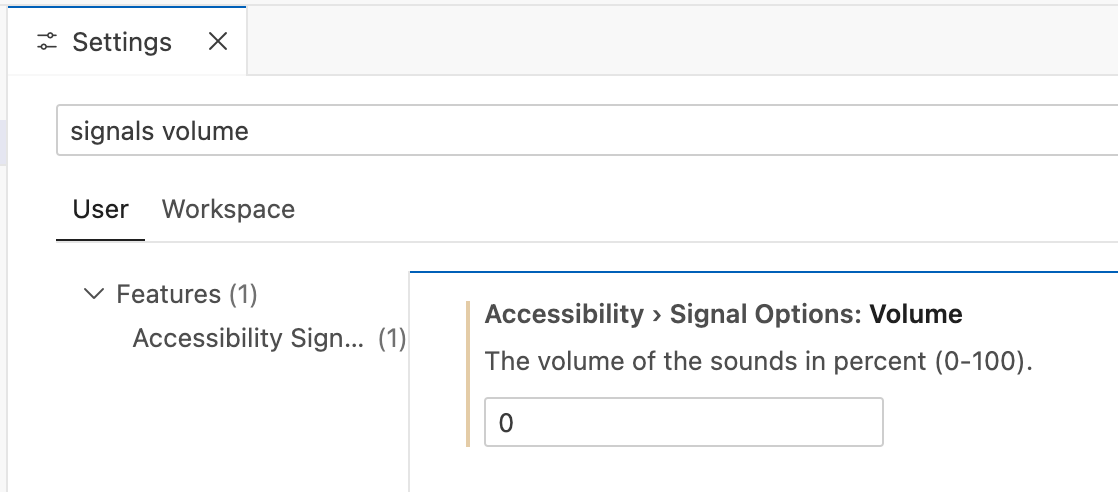

Currently, the option is not turned on by default, so you have to go to Settings and turn on the Enable Terminal Sandboxing toggle. The Terminal Sandboxing docs page also mentions that:

> Sandboxing is currently disabled by default, but this may change in future releases. It is only available on macOS, where it leverages Seatbelt (sandbox-exec), Apple's kernel-level sandboxing mechanism. Linux support is coming soon.

The docs page has much more information on this, and it's worth checking out.
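For a rough feel of what a Seatbelt sandbox looks like, here is a minimal sketch using `sandbox-exec` directly. To be clear, this is not Antigravity's actual profile, which I haven't seen. The `make_profile` helper and the rules in it are my own illustration of the general idea: allow everything, deny file writes, then re-allow writes under a single directory.

```shell
#!/bin/sh
# Print a hypothetical Seatbelt profile that blocks file writes outside DIR.
# This is NOT Antigravity's profile, only an illustration of the format.
make_profile() {
    dir=$1
    cat <<EOF
(version 1)
(allow default)
(deny file-write*)
(allow file-write* (subpath "$dir"))
(allow file-write* (literal "/dev/null"))
EOF
}

# Only try the sandbox where sandbox-exec exists (macOS).
if command -v sandbox-exec >/dev/null 2>&1; then
    # Use throwaway directories; pwd -P resolves symlinks, since the
    # rules are generally matched against resolved paths.
    inside=$(cd "$(mktemp -d)" && pwd -P)
    outside=$(cd "$(mktemp -d)" && pwd -P)
    profile=$(make_profile "$inside")

    sandbox-exec -p "$profile" touch "$inside/ok.txt" \
        && echo "write inside the allowed directory succeeded"
    sandbox-exec -p "$profile" touch "$outside/nope.txt" \
        || echo "write outside the allowed directory was blocked"
fi
```

Note that the `sandbox-exec` man page marks the tool as deprecated, so treat this as a way to poke at the mechanism rather than something to build on.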

Update:

The terminal sandboxing feature in Antigravity is annoying as hell: it constantly asks me to run the following command in my terminal.

sudo chown -R 501:20 "/Users/deepak/.npm"
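For context, that command recursively hands ownership of the npm cache folder to user ID 501 and group ID 20. On a default macOS setup, 501 is typically the first user account and 20 is the `staff` group. Before running it with `sudo`, you can check whether the folder really contains files you don't own. The sketch below is my own; `count_foreign_owned` is a made-up helper name, not something Antigravity or npm provides.

```shell
#!/bin/sh
# Count entries under a directory that are NOT owned by the current user.
# Usage: count_foreign_owned DIR
count_foreign_owned() {
    dir=$1
    # `! -user ID` matches entries whose owner differs from the given user ID.
    find "$dir" ! -user "$(id -u)" 2>/dev/null | wc -l | tr -d ' '
}

# Show the numeric user and group IDs of the current account,
# to compare against the 501:20 in the suggested chown command.
echo "uid:gid = $(id -u):$(id -g)"

# Check the npm cache folder if it exists.
target="$HOME/.npm"
if [ -d "$target" ]; then
    echo "entries not owned by you in $target: $(count_foreign_owned "$target")"
fi
```

If the count is zero, ownership is probably not the real problem and the `chown` would not change anything.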

I am turning this feature off.

Google needs to test new features thoroughly before releasing them to the public.